There are countless methods of copying data from one database to another, however, here we present a toul that performs this operation in a very flexible and efficient way.

This article shows how to use SqlBulkCopy on a .Net core project. Using SqlBulkInsert.

We can insert a few millions of rows in a few seconds using SqlBulkCopy . If you use INSERT statements, you'll need a few minutes. To not use too much memory, you can stream the data to the database by creating your own DbDataReader.

|

CREATE TABLE [dbo].[Profile](

[Id] [int] IDENTITY(1,1) NOT NULL,

[FirstName] [nvarchar](50) NULL,

[LastName] [nvarchar](50) NULL,

[Email] [nvarchar](50) NULL,

[DateOfBirth] [datetime2](7) NULL

)

|

SqlBulkCopy is a class in the System.Data.SqlClient namespace that allows you to insert into SQLServer in a very efficient manner.

We can point SqlBulkCopy to the SQL Server table in our database and then call the WriteToServer method with a IDataReader, DataTable, DataRows.

|

// SQL server connection.

// datatable to store data in a table.

using (SqlBulkCopy bulkCopy = new SqlBulkCopy(connection))

{

bulkCopy.DestinationTableName = "tablename";

// Number of rows to send to the database

bulkCopy.BatchSize = myDataTable.Rows.Count;

// Store the data to the table

bulkCopy.WriteToServer(datatable);

bulkCopy.Close();

}

|

Project Setup

We need to create a three projects:

The main project that called SqlBulkCopy

1. The project for business logic is called SqlBulkCopyBusinessLayer.

2. The project to create a model and DbContext where we can perform the SQL operations is called SqlBulkCopyDataLayer.

To start, we will create an ASP.NET Core 6.0 web Application with a project type of web API and call it SqlBulkCopy.

To test an insert of many entities at once we need to generate testing data.We will use a Bogus nugget package to generate fake data.

Bogus package is easy to use fake data generators. It will generate random values that will fit a given class, like a firstname,lastname, email etc.

Require packages:

1.SqlBulkCopy

Install-Package Microsoft.EntityFrameworkCore.Design-Version 6.0.3

2. SqlBulkCopyBusinessLayer

Install-Package Bogus -Version 34.0.1

Install-Package Microsoft.Extensions.Configuration.Abstractions -Version 6.0.0

Install-Package System.Data.SqlClient-Version 4.8.3

3. SqlBulkCopyDataLayer

Install-Package Microsoft.EntityFrameworkCore.Design-Version 6.0.3

Install-Package Microsoft.EntityFrameworkCore.SqlServer-Version 6.0.3

Next for the project setup we need to add a reference to the SqlBulkCopyDataLayer project in our SqlBulkCopyBusinessLayer project. And add a reference to the SqlBulkCopyBusinessLayer in our SqlBulkCopy project. The Final Setup will look like this:

Set up the connection string in the appsettings.json file.

Inserting data with Entity Framework Core

For demonstration, we will generate Profiles data in the service method instead of sending from the request and insert it right after that.

Generate an Application DB context class.

|

dotnet ef dbcontext scaffuld "Server=your server name;User Id=yourid;Password=yourpassword;Database=your database name;" Microsoft.EntityFrameworkCore.SqlServer -f -o BulkCopy -c AppDbContext -t Profile

|

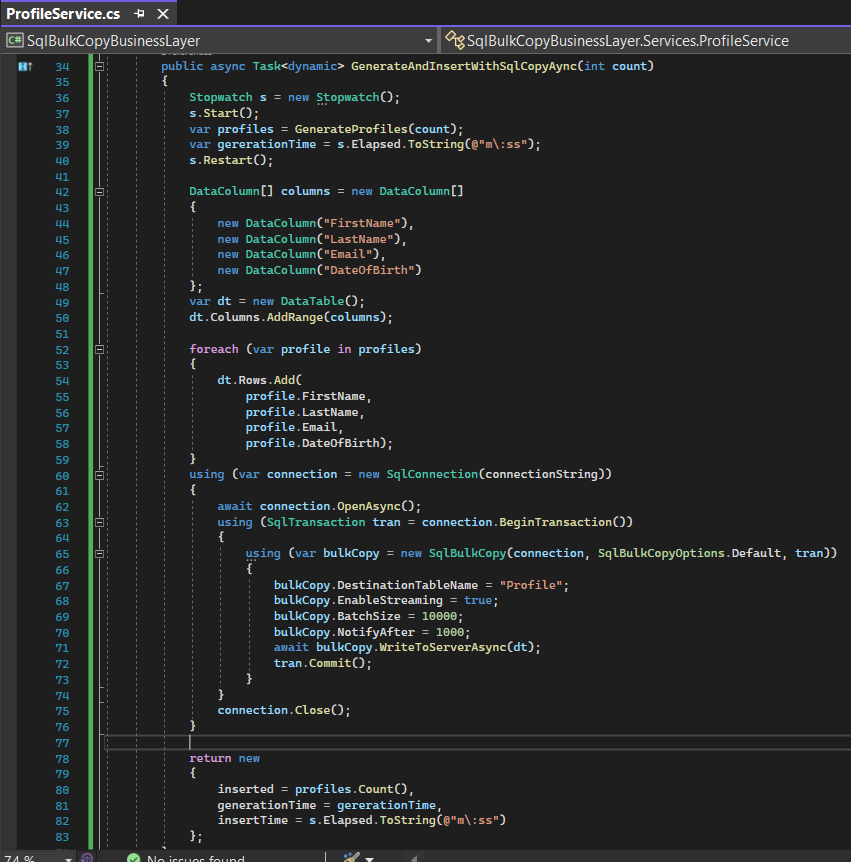

Create a service file ProfileService in which we can add the methods to perform bulk insert using SqlBulkCopy.

Here we will add a Stopwatch to measure how long it takes to generate data as well as insert them. And we will return an anonymous type to see the result.

Inserting data with SqlBulkCopy

First, we need to define a Datatable. It needs to represent the Profiles table because this is our destination table that we are going to use. Then by using WriteToServerAsync we are loading data to the database.

SqlBulkCopy does not create a data file. It streams the data table directly from the .Net DataTable to the server using the available communication protocul and inserts the data to the destination table in bulk.

The final step is to create a simple endpoint that will call the ProfileService to call bulk insert.

And that's enough. Now, you can test the code. Press F5. and goto https://localhost:7173/swagger/index.html

Entity Framework output

SqlBulkCopy output

As you can see the difference it both the output to insert 100000 profiles:

|

Entity Framework

|

7 min 31 sec

|

|

SqlBulkCopy

|

0.47 sec

|

Thank you for reading…